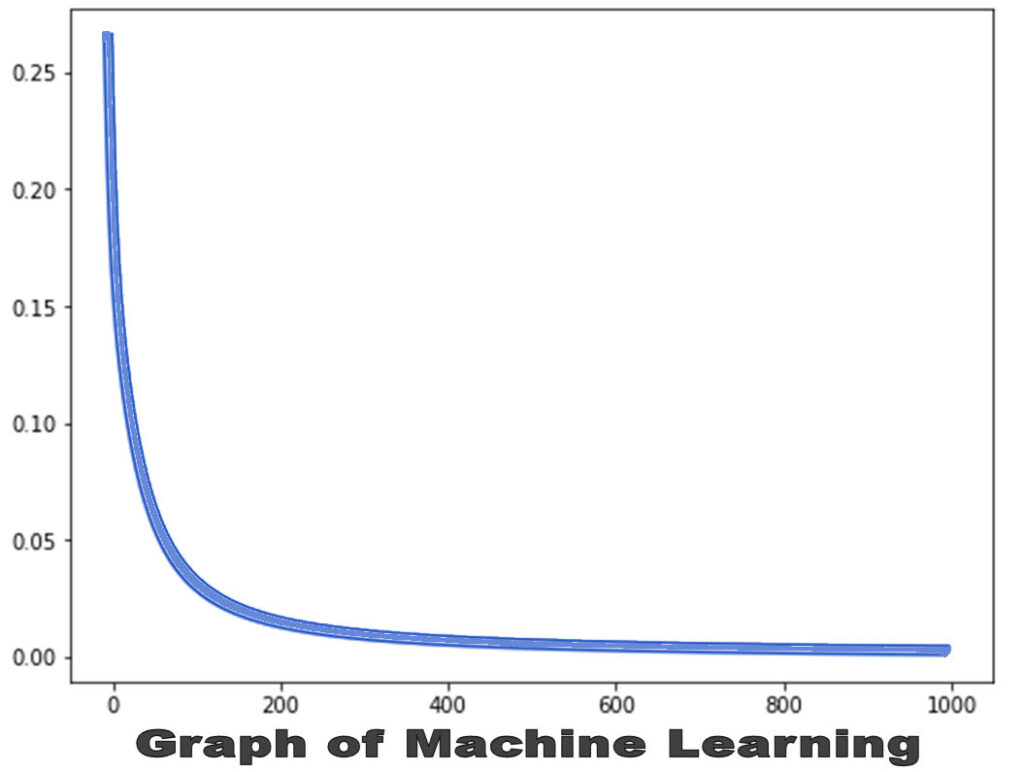

To leverage time, an AI system has to learn at a geometric rate, but the AI systems of today’s BigTech use decades-old technology that works in linear time. BigTech neural networks learn through a process called “gradient descent”, which in simple terms is a calculation cycle that moves a learning “error target” towards a smaller and smaller error value, so that each learning cycle yields less learning than the one before.

Because of this, the AI systems used by academia and BigTech will always be stuck in linear time.
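The gradient descent cycle described above can be pictured with a minimal Python sketch. The toy error function `(x - 3)^2`, the starting value, the learning rate, and the names `gradient_descent`, `loss`, and `loss_grad` are all illustrative assumptions, not taken from any particular BigTech system.

```python
def loss(x):
    # Hypothetical error function: zero when x reaches the target value 3.0.
    return (x - 3.0) ** 2


def loss_grad(x):
    # Derivative of the error function above with respect to x.
    return 2.0 * (x - 3.0)


def gradient_descent(grad, x0, learning_rate=0.1, steps=50):
    """Repeat the calculation cycle: step against the gradient each time."""
    x = x0
    history = [x]
    for _ in range(steps):
        x = x - learning_rate * grad(x)
        history.append(x)
    return history


history = gradient_descent(loss_grad, x0=0.0)
errors = [loss(x) for x in history]

# Show the error and the size of the update for the first few cycles.
for cycle in range(5):
    step = abs(history[cycle + 1] - history[cycle])
    print(f"cycle {cycle}: error={errors[cycle]:.4f}, step={step:.4f}")
```

In this sketch both the error and the size of each update shrink from one cycle to the next, which is the ever-decreasing behavior the text refers to.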

How does geometric learning work?

Our biological brains learn geometrically. This can be pictured with a simple example of multiplying pennies.
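As a rough illustration of the penny picture, the sketch below compares doubling a penny every day with adding one penny every day. The 30-day span, the one-cent starting amount, and the function names `doubling_pennies` and `adding_pennies` are assumptions chosen for the example; the text itself does not specify them.

```python
def doubling_pennies(days, start_cents=1):
    """Geometric growth: the amount doubles every day."""
    cents = start_cents
    totals = []
    for _ in range(days):
        totals.append(cents)
        cents *= 2
    return totals


def adding_pennies(days, start_cents=1, step_cents=1):
    """Linear growth: a fixed number of cents is added every day."""
    return [start_cents + step_cents * day for day in range(days)]


geometric = doubling_pennies(30)
linear = adding_pennies(30)

print(f"day 30, doubling: ${geometric[-1] / 100:,.2f}")
print(f"day 30, adding:   ${linear[-1] / 100:,.2f}")
```

Under these assumptions the doubling penny reaches 536,870,912 cents (about $5.4 million) on day 30, while the penny that grows by one cent a day reaches 30 cents.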

The new AI technology from the First Frontier Project learns at the same geometric rate, and only the AI technology from the First Frontier Project can demonstrate time leveraging.

Time-leveraged AI makes possible many so-called “enabled technologies”, which are technological innovations that are only possible with the supreme leveraging that geometric learning provides.

These innovations include new, truly interactive social media platforms, an entirely novel 3D graphics-based communication medium, and even inventive thermodynamic molecular synthesis approaches that can unravel the secrets of new energy sources.

Of course, there will be advances in many other areas, such as education, medical imaging, weather prediction, and real-time global shipping and trade. Enabled technologies open the door to the grander evolutions of our information age, developments that BigTech keeps promising but has never delivered.